Integrating accessibility compliance into your software development process

What does it mean to “be compliant”?

Many people who work in software are not aware of the accessibility standards their products need to meet. In both the government and private sectors, there are web accessibility standards we are legally required to meet (not to mention, it's the right thing to do). When many of us hear about these requirements for the first time, it can be really overwhelming!

Table of contents for WCAG 2.0

Here at Truss, most of us work on government contracts, so we have targets we must hit to deliver on our client agreements. Most of the time, that means compliance with Section 508. At the time of writing (early 2023), this means meeting the Web Content Accessibility Guidelines (WCAG) 2.0 at the AA level. If you are new to the wide world of accessibility, digging into these rules and guidelines can be challenging. It's tough to determine which guidelines apply to your project and what you actually need to do: Do you need to fix things? Run an audit? Where do you even start? To help alleviate some of that uncertainty, we keep primers for our team members and clients in our accessibility wiki.

At Truss, we have volunteer-run working groups, which we call guilds, that focus on specific topics to learn more and establish best practices for the organization. Our accessibility guild has worked together for over two years to figure out the best approach to tackling these issues and to define a process that feels actionable. Many of these pieces have come together over time thanks to contributions from many Trussels, past and present.

Filling out an accessibility scorecard

Before you start testing and changing processes, it can be a good idea to take inventory of what you have completed so far and what resources are available to you. To help standardize this assessment at Truss, we developed an Accessibility Scorecard that provides metrics for identifying the biggest opportunities for improvement. Teams then use it to decide where to begin and which improvements to prioritize.

No matter where your project is in its lifecycle, there is likely a lot of work to be done, and it is not realistic to think you can tackle it all in one sprint. Building accessible software is not a matter of checking boxes on a list; it requires changing processes and iterating on them to do better over time. At Truss, we are big believers in improving through marginal gains, which is what this process is all about.

The categories in the scorecard cover a range of topics. They include access to testing tools, automated testing, training across the team, involving potential users who use assistive technology, including accessibility standards in acceptance criteria, and having a place where accessibility compliance is logged and reported to the client, such as a Voluntary Product Accessibility Template (VPAT) and/or Accessibility Conformance Report (ACR). We document the metrics and scores, along with examples and guidance for making improvements, on the Scorecard page of the Truss Accessibility Wiki.

Drafting your accessibility plan

You will collect the processes you develop and the information your team gathers in an Accessibility Plan: a living document you maintain over time to keep track of stakeholders (such as your government agency's Section 508 program manager), assistive technology targets, the tools you use for automated and manual testing, what your client wants to see in your compliance report, and more. On my team, I am the point person for the accessibility plan, but anyone on the team can contribute and make adjustments to it.

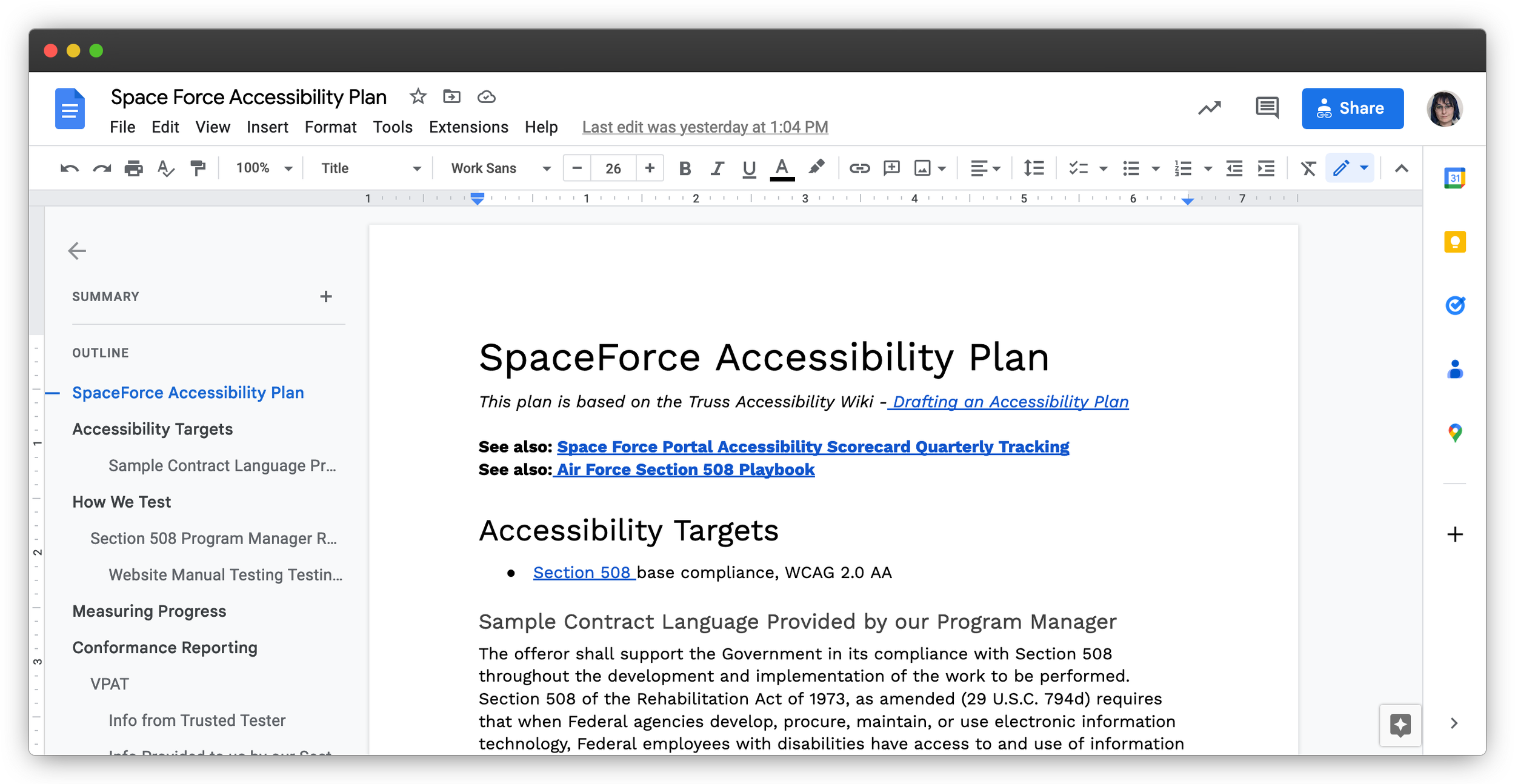

An excerpt from an example accessibility plan

I use my accessibility plan to keep a centralized inventory of what we have accomplished so far and to keep my team in the loop about what we are working toward. It will also make my life a lot easier when I fill out our VPAT, which typically includes a methods section that asks how the team tested for accessibility, which tools and methods were used, and how often testing took place.

The accessibility plan can be as simple as a Google Doc, but it's best to stick with the documentation tools your team uses most, be that Confluence, Notion, GitHub, or something else.

Improving your score and iterating on your plan

The Accessibility Scorecard covers a lot of ground, and it's okay if your team has a long way to go (most of our teams do, too). The goal of the exercise is to keep improving. Our guild is working toward having each project team fill it out once a quarter and report on what they improved since the previous quarter. Some quarters, a team might jump four or five points; in others, maybe one, or none at all.

The main measure of success is not the score itself but the strides a team makes over time. Some of our teams have to be more aggressive because they are on production contracts with impending go-live dates. Others are doing discovery or building MVPs that don't need to be perfect right away. The philosophy of our accessibility guild is to focus on improving processes and finding sustainable solutions, rather than simply checking off lines on a VPAT at the end of a project. Many of these metrics require fundamental process changes, and those changes won't stick if you try to make too many big ones at once.

Conclusions

This is an ongoing journey at Truss, and it probably will be for your team, too. Even with a fresh start, you're not going to get everything right on your first try, and that's okay. Hopefully, some of the ideas and resources here can inspire other teams and help make the web a more welcoming place for everyone.

Resources

Truss Accessibility Repo - helpful resources such as a PR review template and process examples

Truss Accessibility Wiki - documentation around our resources and process recommendations

Truss Accessibility Scorecard / Template - Accessibility Scorecard documentation and Google Sheets template

Drafting an Accessibility Plan - guidance on making an accessibility plan

Special thanks to the other Trussels, past and present, who took the time to develop these materials:

Christine Cheung, Jim Benton, Kim Allen, Josh Franklin, Sai Mohan, Kaleigh Simmons, Liz Lin, Daniel Griffith, Andy Nelson, Suz Rozier, and Hana Worku

And to the folks who helped me write and edit this post: Katherine Osos, Joe Kleinschmidt, and Kelsey Listrom