Feedback loops and refinements

This is part of a series discussing our practices and values in the context of unemployment insurance modernization with the US and NJ DOL.

We don’t sell solutions; we solve problems. We ask questions, test out ideas, and iterate until we get it right. Technical issues almost always end up being people issues, so that’s where we put our focus in this modernization project with the two DOLs. The development and decision-making process we covered in the previous post would not have been as effective without a tightly integrated cross-functional team. Our person-to-person feedback loops were a key factor in how we delivered on our contract and continued to pursue mastery of process.

Our communication was crucial to setting expectations and pacing work, and it required significant, consistent attention. We largely managed to identify and address misunderstandings before they resulted in significant delays or conflicts, and we found some places where working across initiatives amplified the value we delivered to the project. Our USDOL liaison and project manager was particularly critical here.

The USDOL staked $2 billion on this modernization initiative, and they set a challenging timeline to meet their goals. At the same time, the NJDOL had its own ongoing modernization efforts. We relied on our process to meet those challenges and coached both NJDOL and USDOL in adopting a more modular approach to architecture and an agile approach to delivering it.

Our process

For both the first and second year, we worked in two phases: discovery and framing, and then continuous delivery. In our early phase with New Jersey, we interviewed subject matter experts at NJDOL, their community partners, and claimants. This allowed us to triangulate and more clearly bound the problems to be solved in our work period, while also providing nuance and stories from different perspectives.

From then on we began design and development, using frequent demos and vetting sessions with stakeholders and concept tests with claimants to check our understanding. Those artifacts soon grew into working code and clickable prototypes to test at higher and higher fidelity until we arrived at our current tight refinement loop:

Product definition in a GitHub ticket

Once project members report a bug or propose an enhancement, all team members have a chance to refine it asynchronously up to a sprint refinement meeting, where it is fully socialized and agreed to be ready for work. Product, design, and engineering often work together at this point to ensure it is defined appropriately from all angles.

Rapid design prototyping and vetting/testing in Figma

A designer tagged on a ticket investigates what is needed, gathers any new information, updates Figma, vets the design with the stakeholders who defined the ticket, and tests it with claimants or against past user research. For many of our sprints, we conducted tests in which we compensated participants for their time. The designer then posts their work and specifications to the ticket with more specific acceptance criteria.

Agile development, including a design review in GitHub

Once engineers estimate the level of effort for a ticket and prioritize it, an engineer assigns themselves to the ticket, completes the work, and makes it available for review. Engineers conduct a technical review while designers conduct a design review, including checking that the work matches the visual spec, passes an accessibility audit, and behaves appropriately on all devices and screen sizes. This is fully detailed in our GitHub PR and issue templates, the former of which is available in our Accessibility repository.

Automated testing and deployment to test environments

As engineers push code to GitHub during the review process, automated tests run to check code quality, confirm that the change meets technical accessibility requirements (i.e., ARIA and Section 508), and verify that it is deployment-ready. Once the change is approved and merged, our pipeline automatically deploys it to staged deployment environments for the final step.

Demos and acceptance testing (and sometimes repeat)

We kept these environments as similar as possible to the final production environment to give stakeholders a stable preview of each change. This made them the ideal place to host demos, user acceptance testing, and usability testing. Depending on the testing outcome, we may close this loop with a successful improvement or run another iteration with a related enhancement or bug ticket.
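To make the automated testing step more concrete, here is a minimal sketch of one kind of accessibility check a pipeline could run on each push. It is an illustration only, not the tooling used on this project: the class and function names are hypothetical, and it covers a single rule (every image needs an alt attribute, since text alternatives are a baseline accessibility requirement). Real pipelines usually rely on dedicated accessibility scanners that cover many more rules.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Hypothetical checker: records <img> tags that have no alt attribute."""

    def __init__(self):
        super().__init__()
        self.violations = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # An empty alt="" is allowed (it marks a decorative image);
        # only a completely missing alt attribute is reported.
        if tag == "img" and "alt" not in attributes:
            src = attributes.get("src", "(no src)")
            self.violations.append(f"<img src={src!r}> has no alt attribute")


def find_missing_alt(html):
    """Return one message per <img> in the markup that lacks an alt attribute."""
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.violations
```

A pipeline step could call `find_missing_alt` on each rendered page and fail the build when the returned list is not empty, so the problem surfaces during review rather than in acceptance testing.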

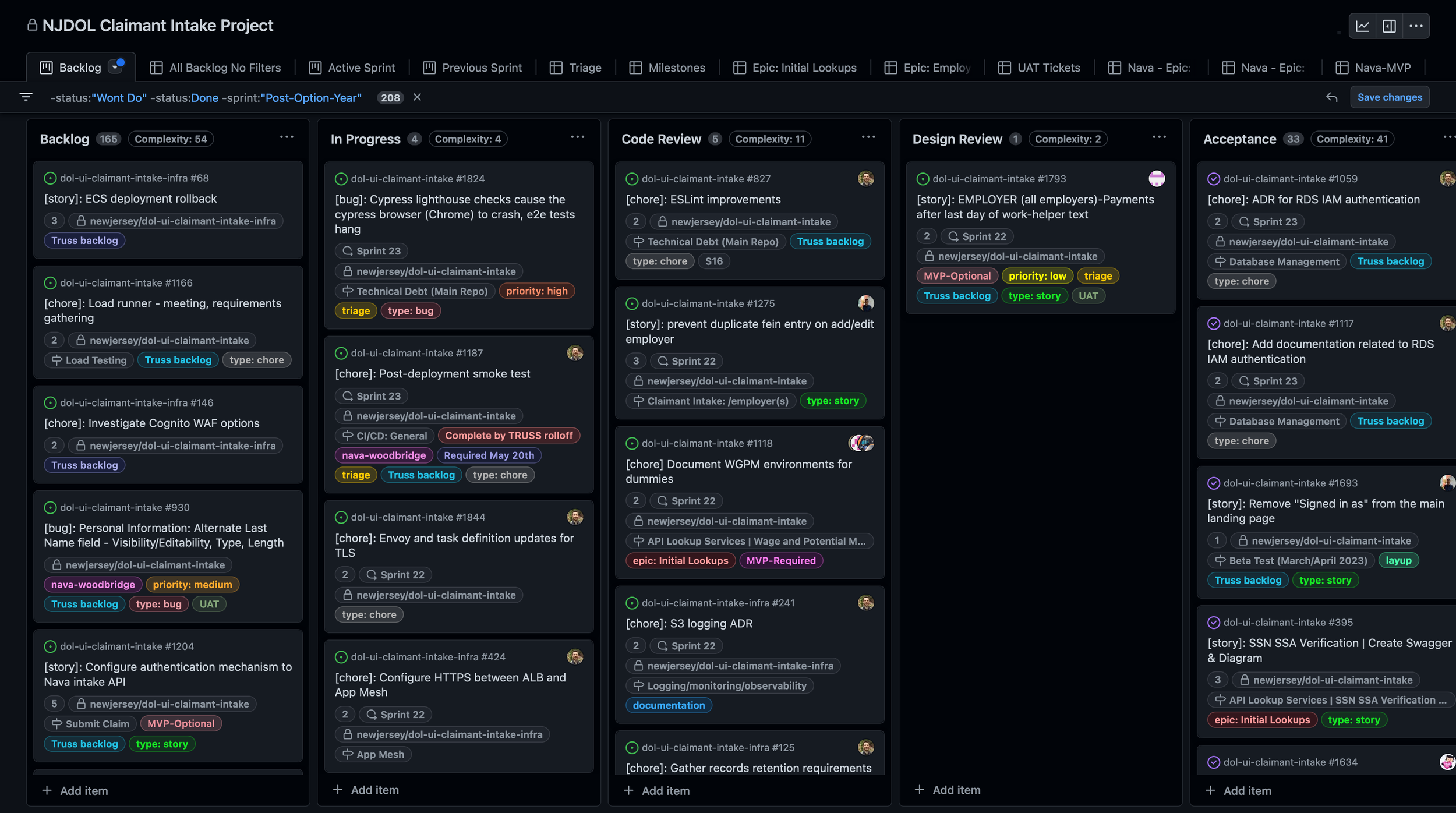

Screenshot of our GitHub Project board

Like many vendors, we were challenged to help a complex government ecosystem adopt and adapt to this agile methodology. We devoted much of our time to training and mentorship, and it showed in NJDOL’s increased quality and capability. In managing this challenge, we have found more success in defining these efforts as goals rather than concrete deliverables. A goal orientation makes space for research and planning, whereas writing concrete deliverables into a contract can incentivize just checking off obligations instead of addressing systemic problems. Starting instead with epic-level user stories facilitates the conversation and points the team in the right direction for discovery and framing. A productive discovery and framing phase leads to a focused, efficient build phase, which results in better delivery.

User Acceptance Testing

The acceptance testing phase of the development feedback loop proved particularly productive for building the skills and mindsets that successful technical modernization projects require within the NJDOL UI bureaucracy.

All members of the NJDOL team collaborated with Truss on sprint-level user acceptance testing (UAT), and we observed significant shifts in how they approached the work. When NJDOL business and policy SMEs participated in testing, they became much more cognizant of the interplay between policy, user experience design, and engineering. We saw them leveraging their subject matter expertise in new and extremely valuable ways, collaborating more effectively with design and engineering teams, and exploring and proposing creative solutions.

Once they were engaged in testing, we saw our NJDOL colleagues’ perspective and agency gradually broaden. Initially, they would resolve tests in a strictly binary fashion (pass or fail), largely based on how the legacy system worked. (“This works like the legacy system works, so it passes the test,” or “this does not work like the legacy system works, so it fails the test.”)

Over time, their testing resolutions became much more nuanced: a feature would pass a test but not be an ideal business or UX solution, so they would propose and actively promote a better one. Essentially, NJDOL team members became business analysts and product managers of the highest quality: expert, curious, critical, and passionate.

As the approach to UAT became more fluid and coordinated with each sprint, the testers became much more active and animated in confirming the absolute quality of the work, exploring enhancements, and proposing prioritizations. We predict that UAT at NJDOL will become even more valuable and dynamic as our successor contractor Nava implements their “deployment preview” framework.

“It's an agile approach to building the technology, and it's a human centered approach in how we prioritize what comes next.”

–Jill Gutierrez, director and one of our key stakeholders at NJDOL. Quote reported by FCW.

Can testing, and UAT in particular, enable critical technical modernization capabilities in State Workforce Agency (SWA) teams? Could this be a means of jump-starting key skills and mindsets among SMEs, building SWA modernization capacity while simultaneously building modernized UI systems? This might be an area for further exploration and experimentation by USDOL UI modernization thought leaders.

And how does this story resonate with you? Is your team trying to modernize how it responds to change or meets evolving user needs? Truss can help. Please let us know what questions you have. We’d be happy to jump on a quick call and open up the conversation.
