Navigating the VPAT Journey: Testing & Reporting for Accessibility Compliance

Recently, the Space Force Portal Team at Truss worked on a previously untouched row in our Accessibility Scorecard: “Project has a VPAT and it is kept up to date.” We had started one long ago at the start of the project, but we hadn’t finished it. This felt like a good area of improvement for our team, so it is the accessibility goal we decided to pursue for Q2 in 2023.

A lot of this process was mysterious to us going in, even for me after specializing in accessibility and inclusive design for most of my career. In the spirit of the Truss Value of Show Up Step Up, I documented the process we went through to reach our end goal of a first VPAT, to make it less confusing for someone else facing the same set of open questions and to help more project teams be proactive about their accessibility testing, reporting, and remediation.

Preview of report with some of the tools used to make it

What’s a VPAT?

If you’re new to this, a VPAT (Voluntary Product Accessibility Template) is a document we as government contractors have an obligation to deliver to our clients for any digital product (or as the government likes to call it, ICT: Information and Communication Technology) procured by the government. This document consists of some high-level information about the product, the conditions it was tested under (like browser and operating system), and a list of all the Web Content Accessibility Guidelines (WCAG) criteria along with the level of compliance the system meets for each criterion (Section 508 says we need to get AA compliance in each category).

On the Space Force Portal project, we are not quite at a full-fledged launch of the portal, but it can be very helpful to test early and get an idea of where you stand, to reduce the risk of tech debt and hard-to-resolve accessibility issues. Our hope is to keep our VPAT updated with each release of the portal to ensure we are building the best experience possible for all Space Force Portal users.

As we got started on this journey, this task seemed pretty daunting! It didn’t seem like there was a quick and easy path to doing this work and there was a lot of information online that didn’t feel actionable, or even conflicted. Even though I already knew a lot about digital accessibility, I decided I wanted to be fully in alignment with the expectations of the federal government, so I went to the source before getting started.

Trusted Tester Methodology

Before starting to test our platform, I completed the Department of Homeland Security’s Trusted Tester program, at the recommendation of my project’s Section 508 Program Manager. This ensured I had a full understanding of what the government expects from us and that I was using the tools and approach that would be used should our work be checked later on. It also helped me understand the process and expectations for creating a report to deliver to our stakeholders.

https://www.dhs.gov/trusted-tester

This training is free, online and asynchronous, so in the months leading up to doing this work, I chipped away at the coursework in my free time. To earn your certificate, you have to complete a course exam, which consists of auditing a website the platform generates and catching at least 90% of the issues it has. This exercise also helped me understand the edge cases and what I needed to be looking for when conducting testing on the portal. At the end you get a certificate and even a Trusted Tester ID number to put on your reports. So official!

ANDI Testing Tool

The Trusted Tester approach synthesizes all the things you need to look for into about 66 individual tests, many utilizing the tool ANDI (Accessible Name & Description Inspector), also free and provided to us by the government. ANDI is part manual testing aid, part automated testing tool. It visually exposes the data that would get sent to a screen reader or other assistive technology, computes color contrast values, and alerts you to potential risks in your code.
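To make the color contrast part less abstract, here is a minimal Python sketch of the contrast ratio formula defined in WCAG 2.x. This is not ANDI's code; it only illustrates the kind of value a contrast checker reports. The function names are my own, and the 4.5:1 and 3:1 thresholds are the WCAG Level AA minimums for normal and large text.

```python
def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    value = hex_color.lstrip("#")
    r, g, b = (int(value[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) up to 21.0."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the WCAG AA minimum: 4.5:1 for normal text, 3:1 for large text."""
    minimum = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= minimum


if __name__ == "__main__":
    # Mid-gray text on a white background, a common borderline case.
    print(round(contrast_ratio("#767676", "#ffffff"), 2))
    print(passes_aa("#767676", "#ffffff"))
```

A tool like ANDI does this for you on the live page, so a sketch like this is mostly useful for sanity-checking a color pair during design, before anything is built.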

https://www.ssa.gov/accessibility/andi/help/install.html

Instructions for how to use ANDI to test for specific things are outlined in detail in the Trusted Tester process, which also is open source and available to anyone whether or not they have completed the certification. I will say though, it’s a lot easier to follow and understand if you have completed the coursework and certification.

Determining Testing Scope

A good starting point when testing a platform is to figure out the scope of what you are testing in the first place. When planning our accessibility testing, we started by creating an application diagram of all the pages and URLs that would be our responsibility to ensure compliance for.

Our application diagram, created in our whiteboarding tool

To do this, we went through the app and collected screenshots and URL paths that we would need to access during testing. Going on a journey is always much easier when you have a map to follow.

When creating this map, we scoped the testing to both our client app that most users will see, and the CMS (content management system) used to organize and maintain that content.

Trusted Tester Worksheet

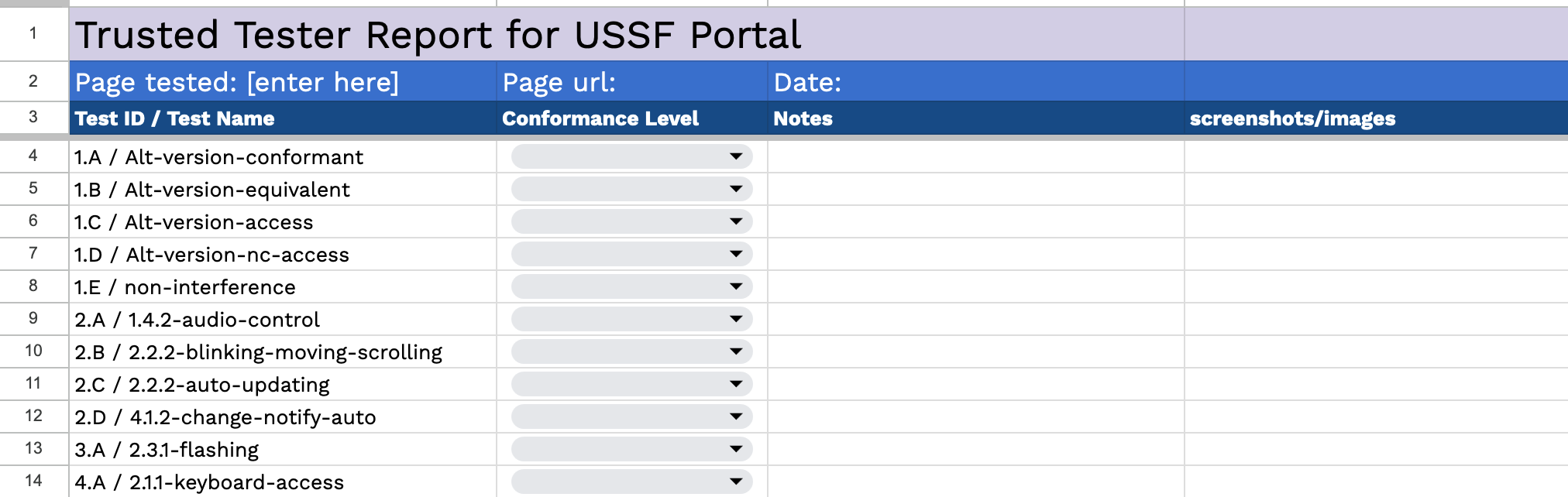

Before beginning our testing, we took all the different Trusted Tester criteria and put them in a spreadsheet (you can use it too: Trusted Tester Worksheet Template in Google Sheets). This sheet was duplicated for each page in the system, as each page had to be individually tested against each criterion. If even one page fails a criterion, it needs to be entered in your report as a failure for that criterion.

Different tabs for each page in the worksheet spreadsheet file, to ensure all criteria are tested on each page

Making the spreadsheet allowed us to collect test results at the page level, which we were later able to synthesize into the final report on the whole system.

Spreadsheet with columns for each Trusted Tester criterion, with space for conformance level and notes

The spreadsheet also has a drop-down showing the Trusted Tester conformance level markers (Pass, Fail, Does Not Apply, and Not Tested), an area for notes, and an area to place applicable screenshots for added context.
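If you want to picture how page-level results roll up into one system-level result per test, here is a rough Python sketch. The only rule taken from our process is that a failure on any page means a failure for the whole system. The handling of the other three markers, the test IDs, and the page names other than MySpace are assumptions made up for illustration.

```python
from enum import Enum


class Result(Enum):
    """The four conformance markers from the worksheet drop-down."""
    PASS = "Pass"
    FAIL = "Fail"
    DOES_NOT_APPLY = "Does Not Apply"
    NOT_TESTED = "Not Tested"


def roll_up(pages: dict) -> dict:
    """Combine {page: {test_id: Result}} into one Result per test_id."""
    seen_by_test = {}
    for results in pages.values():
        for test_id, result in results.items():
            seen_by_test.setdefault(test_id, set()).add(result)

    system = {}
    for test_id, seen in seen_by_test.items():
        if Result.FAIL in seen:
            # Rule from our process: one failing page fails the test.
            system[test_id] = Result.FAIL
        elif Result.NOT_TESTED in seen:
            # Assumption: an untested page leaves the test unresolved.
            system[test_id] = Result.NOT_TESTED
        elif Result.PASS in seen:
            # Assumption: passes everywhere the test applies.
            system[test_id] = Result.PASS
        else:
            system[test_id] = Result.DOES_NOT_APPLY
    return system


# Hypothetical data: test IDs and the "Settings" page are placeholders.
EXAMPLE_PAGES = {
    "MySpace": {
        "test-01": Result.PASS,
        "test-02": Result.FAIL,
        "test-03": Result.DOES_NOT_APPLY,
    },
    "Settings": {
        "test-01": Result.PASS,
        "test-02": Result.PASS,
        "test-03": Result.DOES_NOT_APPLY,
    },
}

if __name__ == "__main__":
    for test_id, result in roll_up(EXAMPLE_PAGES).items():
        print(test_id, result.value)
```

We did this step by hand, reading across the tabs of the spreadsheet, so treat the sketch as a way to reason about the rule rather than a description of our tooling.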

Checking Applicable Criteria

The next step we took was finding which Trusted Tester criteria applied to each page. For example, if a test is centered around forms and there are no forms on that page, we would mark the tests that apply to forms as “Does Not Apply.”

Tests marked with the Does Not Apply option

Before getting into the nitty gritty, we went through all the portal pages and marked rows on each page’s sheet as “Does Not Apply” where needed, to help break down the tasks. With 18 pages and 66 test criteria each, it was helpful to whittle it down a bit before getting started.

Testing Pages

Once we figured out what tests applied where, it was time to start testing! We followed the Trusted Tester Process and filled in the conformance level for each criterion on each page for the entirety of the system.

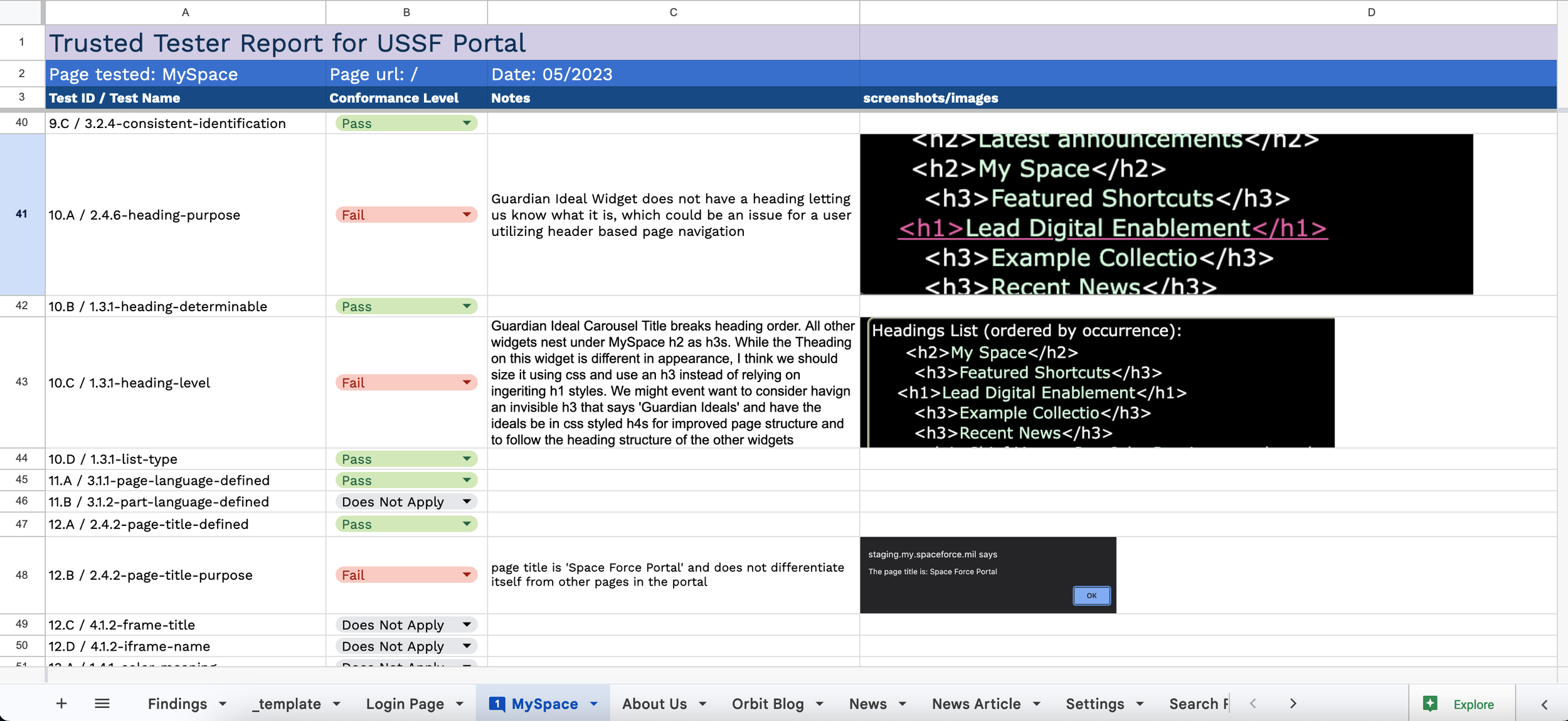

Overview of testing results on the MySpace page

As we went through, even though I had completed the training, I made sure to keep the Trusted Tester process reference open to verify I was entering accurate results along the way.

When doing accessibility testing, it’s important to remember that finding issues is not a mark of failure. The issues would have been there either way, but knowing about them means they can be fixed, and ultimately you will build something better. This is why doing testing and filling out a VPAT long before official launch is a great idea.

ACRT Tool

After we collected test results for all the pages in our defined scope, it was time to make use of another helpful tool made for us by the government, the Accessibility Conformance Reporting Tool (ACRT). This tool is downloaded as a zip from GitHub, and you open the index.html file in your browser to reveal a form showing all the Trusted Tester criteria, with interactive form fields for you to enter information and upload screenshots. The tool also allows you to save your data as a JSON file you can later load back in to make updates and edits.

View of the Accessibility Conformance Reporting Tool (ACRT)

For each criterion, we went through the page tabs in our spreadsheet and brought over any failures we found, along with their data and screenshots. This data can be saved in progress as a JSON file that you can re-import to update an existing report.

Once you have added all the data, ACRT generates an HTML page showing your results as a VPAT. It takes all the Trusted Tester criteria and maps your results to the correct WCAG criteria and compliance levels for you, which definitely saved us a lot of time. You can save that HTML page as a PDF by hitting print in your browser and selecting the Save to PDF option.

Our first set of ACRT results!

At first glance, you’ll notice that we aren’t perfect! The point of testing is to uncover any potential issues, so this is something to celebrate. Also, it’s worth accounting for all the tests we ran in total. Out of over 1,100 total tests run across the various criteria and pages, less than 2% yielded a failure result. Considering we had never conducted testing at this level before, I was pretty impressed with my team and how good we have been about preventing issues in the first place.
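As a rough check on those numbers, here is a small Python sketch of the arithmetic. It assumes the total is simply 18 pages times 66 tests, counting rows marked “Does Not Apply” as well. The exact total and the exact number of failures are not stated here, so the sketch only works out the ceiling that “less than 2%” implies. The function names are my own.

```python
def total_tests(pages: int, tests_per_page: int) -> int:
    """Number of page-level test rows if every test is listed on every page."""
    return pages * tests_per_page


def max_failures_under(total: int, percent: int) -> int:
    """Largest whole number of failures strictly below `percent`% of `total`."""
    return (percent * total - 1) // 100


if __name__ == "__main__":
    total = total_tests(pages=18, tests_per_page=66)
    print(total)                         # 1188
    print(max_failures_under(total, 2))  # 23
```

Under that assumption, “less than 2%” works out to at most 23 failing results out of 1,188.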

What’s next

Finishing your initial VPAT doesn’t mean your time doing accessibility testing is done forever! On the ORBIT team, we incorporate different approaches to accessibility testing at different stages of the development process.

We will continue to use automated code checking tools at the commit level using jest-axe. We also have made a plan to utilize ANDI and keyboard testing for every pull request that gets merged into the repository for the portal.

My project’s accessibility plan document, where we collect important compliance information, log our process, and track progress

Our goal is to update our VPAT with each major release. Based on the findings from our initial round of testing, we are working on getting the remediation work into our backlog to ensure fixes are brought in with each release. We hope that each release will come with a VPAT reflecting stronger compliance. As a starting point, at the end of testing, we collected the failures we found and started figuring out whether each issue is better addressed by design or engineering.

The VPAT we generated from ACRT was sent to our Section 508 Program Manager. Each government agency has one, and they are there to support you in your compliance process, track progress, and answer questions. If you work on a project not procured by the government, it is still helpful to make a VPAT available to users and potential clients of your product. Outside of the government space, you will often see reports like these called “Accessibility Conformance Reports,” or ACRs for short.

We aim to improve through marginal gains, getting closer to being fully compliant over time, and to prevent future issues with the various approaches in our accessibility plan. This ensures that when we get close to official launch, we aren’t doing a big stressful scramble of testing and remediation to achieve compliance. Building accessible software is much easier if you integrate it into your agile workflows.

Accessibility is not something you address once or look at as an afterthought at the end of the process. By committing to it now, my team is not only reducing potential risk on the project, but ensuring that we really build the best thing possible. So often, accessibility best practices are design and engineering best practices, too, and all users benefit. We don’t have everything perfect (not yet, anyway!) but this process helps bring us closer.